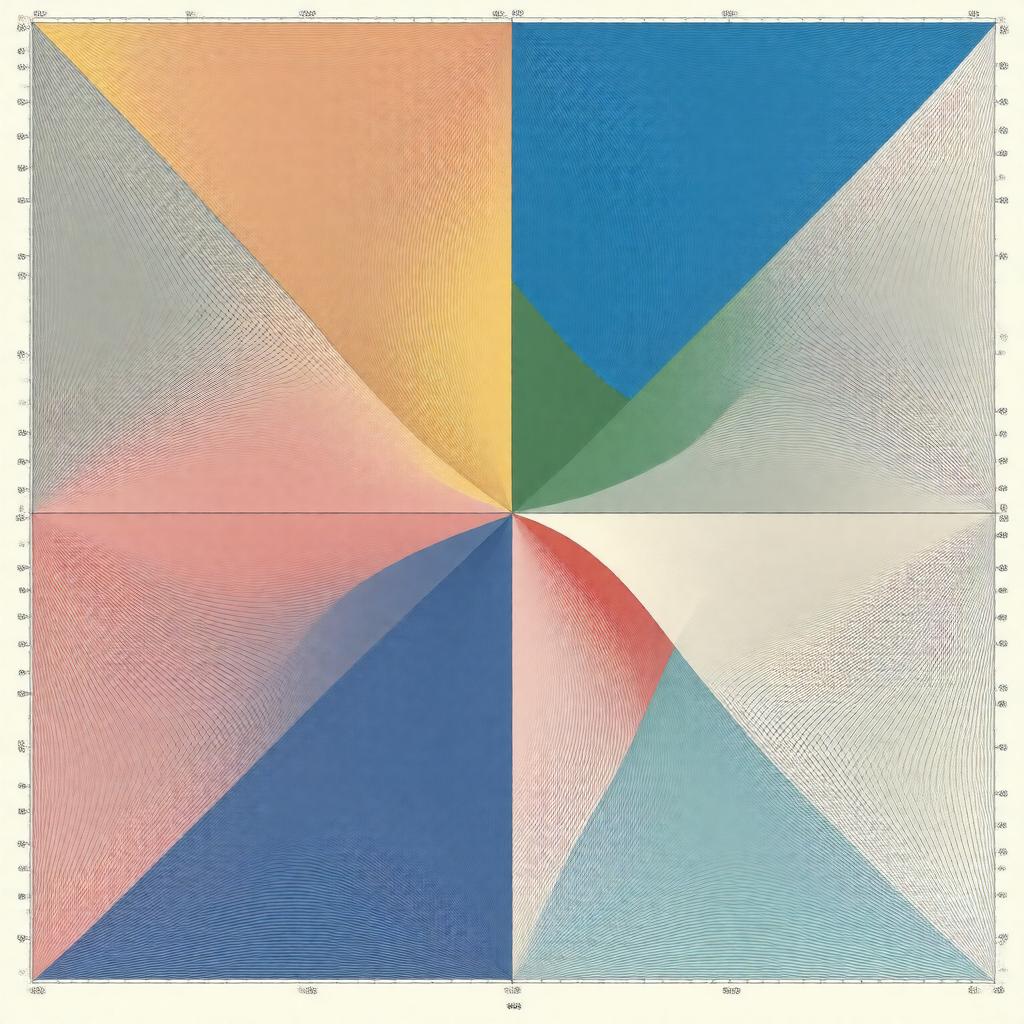

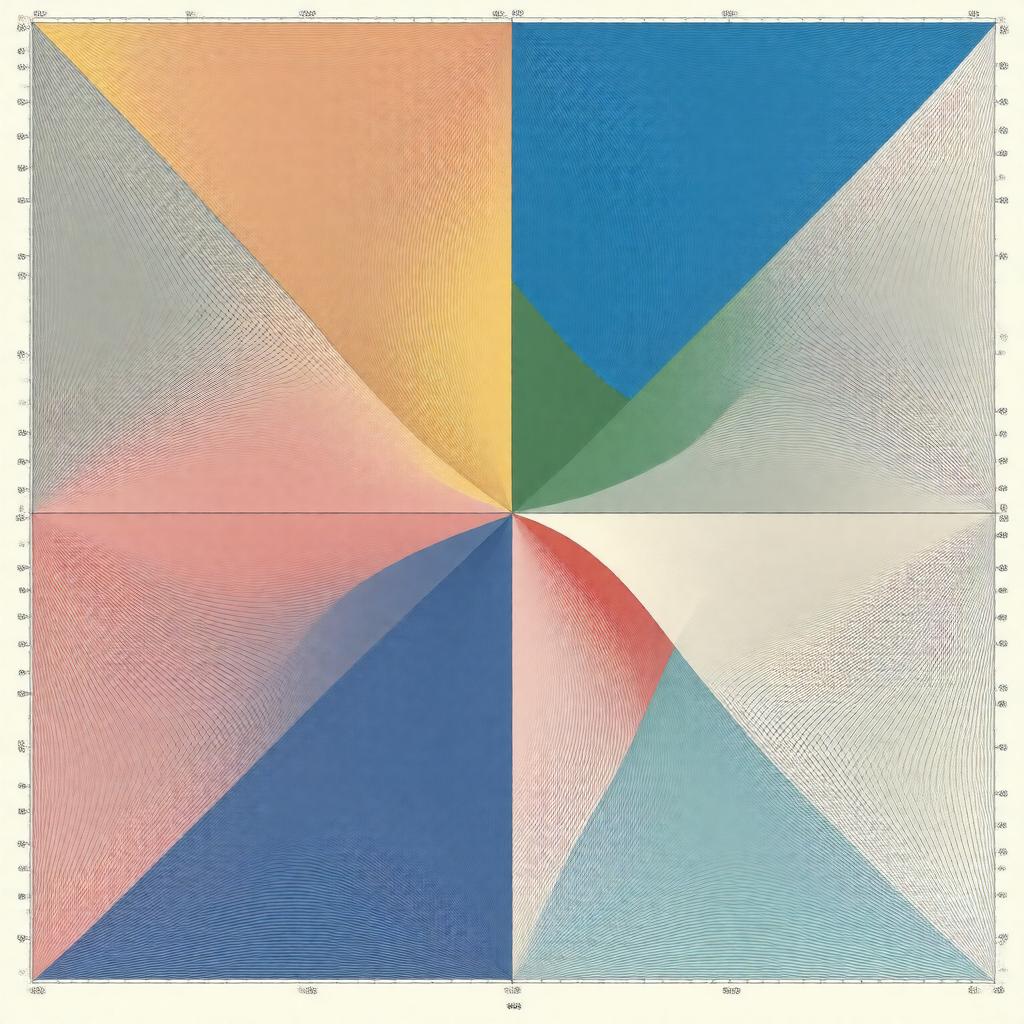

Prompt

"Generate an image representing the concept of Kullback–Leibler divergence, a statistical distance between two probability distributions. Depict a stylized, abstract representation of two overlapping probability distributions, with a divergence or distance metric visualized between them, possibly using a combination of colors, curves, and mathematical notation, such as the formula D_{KL}(P || Q) = \sum_i P(i) \log \frac{P(i)}{Q(i)}. Incorporate elements that evoke a sense of information theory, statistics, and machine learning, while maintaining a clean and simple composition."